So, at the very beginning of the quarantine measures here in Finland, my friends and I started talking about how they would affect small artists and performers. The event and entertainment industry has surely taken a hard hit. Festivals are essentially cancelled, and anyone who can is trying to move some of their activity online. From the start, our idea was to build a platform that lets artists and performers stream live but also gives the people watching a sense of community. There are still features to develop, but I can now say that we have what looks to be a robust design for a streaming platform.

I'll explain a bit about my design and the initial results from testing. There are some existing solutions for building your own streaming server, but it is quite a complex task in general. I wanted to build the system on reliable components that are known to work in these situations and can perform under high load. I prefer to work with open source tools, as they give me a lot of freedom to configure and combine solutions that have been developed and tested for some time. Open source tools are often designed to do one very specific task, and they tend to do it very well. There is a good reason that open source solutions dominate the server world. Nginx and Apache cover just over half of the web server market, and it seems most supercomputers run Linux, though the reported statistics differ wildly, especially on the web server side.

Containers

I have found container-based solutions to be extremely useful when building servers, and especially when developing services. Systems like Docker let you build software components in their own contained environments under the control of the OS, sitting right on the host kernel. Each main component of a service gets an environment that is just right for it, while sharing the main OS resources with very little overhead. This way you can build almost any system to be modular and redundant. Each part can be changed or developed on its own, and updates can be rolled out very quickly. There is also a great benefit for security, as things like databases are isolated in their own containers. So even if someone hacks your front-end, they will find themselves trapped in a much simpler environment that does not hold the important files with user information or other data.

Many VPS providers and server platforms, notably AWS, support Docker containers. This means you can develop in-house and deploy to the cloud.

I may write more about containers in the future, but now you should at least be familiar with the concept.

Server design

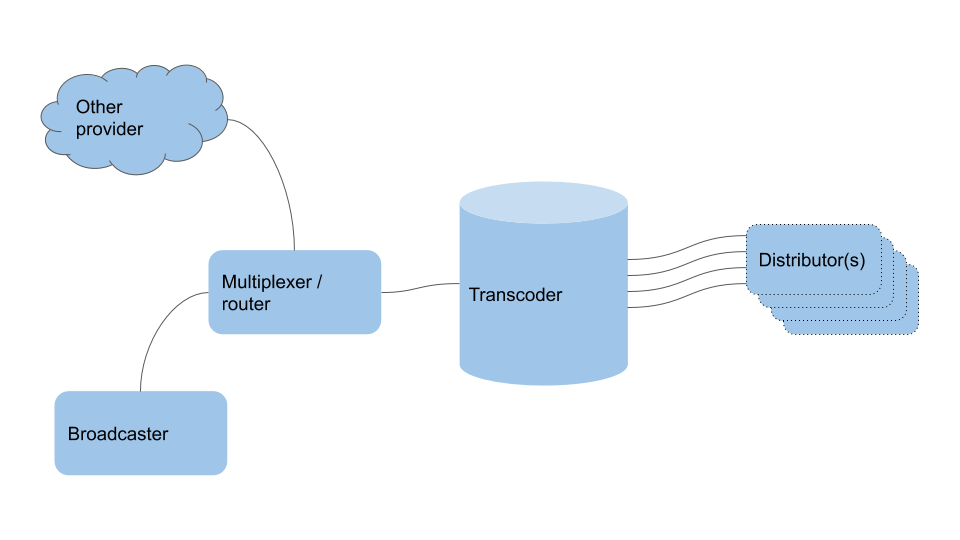

Beyond isolating each function into its own container, some parts of the service require high reliability, and their resource requirements are very different. First, let's look at an example diagram of a simple server setup for a live streaming service.

These are the essential components that, in my opinion, are sensible to split onto actually separate servers, be they dedicated machines or VPSes. Let's go over the main components in the picture and their purposes.

- Broadcaster - This component encodes the stream, usually on location, and sends it over a local network connection to the streaming servers. It is often duplicated for professional events. It can be something as simple as a laptop with OBS, an integrated solution or a full set of redundant encoder servers.

- Multiplexer - This part receives the stream and can route it to multiple services for further processing or distribution. It is rather light on resources, but it is critical and offers flexibility. It can be duplicated, and some broadcasting software supports backup stream servers.

- Transcoder - This server receives the raw high-quality stream and encodes it into several streams and formats of varying quality, usually from 1080p down to 720, 640, 480 and 360, as you may have seen on YouTube for example. This requires some memory and a lot of CPU resources. It is critical because it encodes the video for HTML5-based distribution such as HLS or DASH. This server can be replicated as well, though that requires more complex routing in the next step.

- Distributor - This server does as its name suggests and serves the video fragments to the users. In serious solutions there is often a commercial CDN between the distributor and the user, which helps reduce bandwidth use on the server side. The distributor needs a lot of bandwidth to serve many users and usually caches data in memory to increase speed. It is more like a cache than a web server: it does not host content for long, but is there to ease the load on the back-end servers.
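The transcoder's output can be pictured as a ladder of renditions that the player picks from. As a minimal sketch, the Python below builds an HLS master playlist for such a ladder. The rendition names, the bitrates below the 4 Mbps source, and the `<name>/index.m3u8` paths are illustrative assumptions, not the values running on this platform; only the `#EXTM3U` and `#EXT-X-STREAM-INF` tags come from the HLS format itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str           # hypothetical directory name for this quality level
    width: int
    height: int
    bandwidth_bps: int  # peak bitrate advertised to the player


# Illustrative ladder: a subset of the usual heights, with assumed bitrates.
LADDER = [
    Rendition("1080p", 1920, 1080, 4_000_000),
    Rendition("720p", 1280, 720, 2_500_000),
    Rendition("480p", 854, 480, 1_200_000),
    Rendition("360p", 640, 360, 700_000),
]


def master_playlist(renditions):
    """Return the text of an HLS master playlist listing every rendition."""
    lines = ["#EXTM3U"]
    # List the highest quality first; the player switches between entries.
    for r in sorted(renditions, key=lambda r: r.bandwidth_bps, reverse=True):
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={r.bandwidth_bps},"
            f"RESOLUTION={r.width}x{r.height}"
        )
        lines.append(f"{r.name}/index.m3u8")  # assumed media playlist path
    return "\n".join(lines) + "\n"
```

Calling `print(master_playlist(LADDER))` shows the file a player would fetch first, before it starts requesting video fragments for one of the listed qualities.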

I have omitted the web server that serves the client site and any socket servers that are used for other parts of the experience. Those can be hosted anywhere, as long as you take care of CORS headers and similar details.
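To illustrate the CORS part, here is a small sketch using only Python's standard library. It serves files from the current directory and adds an `Access-Control-Allow-Origin` header when the request comes from an allowed origin. The origin `https://player.example.com`, the address and the port are hypothetical, and a production setup would more likely set these headers in the web server or cache configuration.

```python
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical origin of the client site that embeds the player.
ALLOWED_ORIGINS = {"https://player.example.com"}


def cors_headers(origin, allowed=ALLOWED_ORIGINS):
    """Return the CORS response headers for a request from `origin`."""
    if origin in allowed:
        # Echo the origin back and tell caches the response varies by it.
        return {"Access-Control-Allow-Origin": origin, "Vary": "Origin"}
    return {}  # unknown or missing origin: no CORS headers are added


class CORSRequestHandler(SimpleHTTPRequestHandler):
    """Static file handler that adds CORS headers to every response."""

    def end_headers(self):
        # `headers` may be missing if the request could not be parsed.
        request_headers = getattr(self, "headers", None)
        origin = request_headers.get("Origin") if request_headers else None
        for name, value in cors_headers(origin).items():
            self.send_header(name, value)
        super().end_headers()


def serve(host="127.0.0.1", port=8080):
    """Serve the current directory until interrupted (assumed address)."""
    ThreadingHTTPServer((host, port), CORSRequestHandler).serve_forever()
```

Running `serve()` from a directory that contains the playlists and fragments is enough to try a player hosted on another origin against it.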

Important things to consider

Things to consider when building this kind of system include reliability, flexibility, usability, scalability and compatibility, to name a few. Here are some tips on how to approach these topics.

- Build on stable components. The industry already exists and many of the good solutions are publicly available.

- Think modular. Separating critical components decreases the likelihood of catastrophic failure, especially with redundant systems. This also applies to containerized components, which can be replicated within or across the "physical" servers. It simplifies debugging too, as things tend to fail in smaller units.

- Know your DevOps. That way you can handle even larger development projects as an individual or group.

- Use established formats. Choosing HLS or DASH lets most clients, such as phones and common browsers, play your content. And as you can see, there are widely adopted solutions that the industry uses in building its services.

- Design for scalability. The simplest way to expand user-facing capacity in the above picture is to create and run more distribution servers. You need to take into account that people should be routed to caches that feed from the same transcoding server, so that they do not receive inconsistent versions of files. This can be done with a hash-based load balancer setup. Each level of service can be replicated to a different degree, and distribution can be spread geographically as well.
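The hash-based routing idea can be sketched in a few lines of Python. This is one possible reading of it, not the load balancer configuration used here: each client is hashed to one transcoder group and then to one cache inside that group, so repeated requests from the same client always land behind the same transcoder. The server names and the topology are hypothetical.

```python
import hashlib

# Hypothetical topology: each transcoder feeds its own set of caches.
CACHES_BY_TRANSCODER = {
    "transcoder-a": ["cache-a1", "cache-a2"],
    "transcoder-b": ["cache-b1", "cache-b2"],
}


def bucket(key, size):
    """Map a string key to a stable index in range(size)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % size


def pick_route(client_id, topology=CACHES_BY_TRANSCODER):
    """Return the (transcoder, cache) pair that should serve this client."""
    transcoders = sorted(topology)  # fixed order keeps the mapping stable
    transcoder = transcoders[bucket(client_id, len(transcoders))]
    caches = topology[transcoder]
    # A second, differently keyed hash spreads clients across the caches.
    cache = caches[bucket("cache:" + client_id, len(caches))]
    return transcoder, cache
```

With plain modulo hashing like this, adding or removing a server remaps many clients at once. Consistent hashing reduces that churn, at the cost of a slightly more involved implementation.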

Results and experiences

We had our first test run of the server with nearly 100 friends of mine. The servers I built are deployed by a script, and I mostly need to set up DNS and data routing to have everything ready. So I deployed the servers in the morning and kept polishing the front-end HTML site until we started the stream. We streamed to both YouTube and my own servers for redundancy. At some point the YouTube stream got blocked and people moved to my servers completely. The main load during the 3-hour test was between 15 and 50 users. The majority were watching the raw source stream at 4 Mbps per user. This kept CPU use below 1% on the distribution end and a stable sustained load on the transcoder, which was left to do its job, while peak bandwidth on the single stream distributor was around 20% of capacity. The system successfully divided resource use between the servers and balanced it in a way where client-side demand cannot overload the transcoder (which is still serving some content). For a professional gig I would duplicate more of the servers, but overall the performance was stable on the first run. The system is routed over a mix of public and private networks, supports encrypted tunnels, and has TLS with CORS and other security-related headers enabled.
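The bandwidth side of these numbers is simple arithmetic, which a short Python sketch makes explicit. The 4 Mbps per viewer comes from the test above. The 1000 Mbps link capacity and the 80% headroom factor are assumptions chosen for illustration, since the actual capacity of the distributor's link is not stated here.

```python
def utilisation(viewers, per_viewer_mbps, link_capacity_mbps):
    """Fraction of the distributor's link used by the given audience."""
    return viewers * per_viewer_mbps / link_capacity_mbps


def max_viewers(link_capacity_mbps, per_viewer_mbps, headroom=0.8):
    """Viewers that fit on the link while keeping some capacity in reserve."""
    return int(link_capacity_mbps * headroom // per_viewer_mbps)


# Assumed figures: a 1000 Mbps link, 50 viewers on the 4 Mbps source stream.
ASSUMED_LINK_MBPS = 1000
peak_share = utilisation(50, 4, ASSUMED_LINK_MBPS)
ceiling = max_viewers(ASSUMED_LINK_MBPS, 4)
```

Under those assumptions `peak_share` is 0.2 and `ceiling` is 200 viewers per distributor, which is the kind of estimate that tells you when to add another distribution server or put a CDN in front.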

The next steps are to add more redundant servers and to tune the stream format for a better optimized bit rate. After that we will start testing and developing more interactions for the platform, on top of the stream and chat that the MVP has.

Sources

- https://en.wikipedia.org/wiki/Usage_share_of_operating_systems#Public_servers_on_the_Internet

- https://news.netcraft.com/archives/2019/04/22/april-2019-web-server-survey.html

- https://www.docker.com/

- https://hls-js.netlify.app/api-docs/

- https://en.wikipedia.org/wiki/DevOps

- https://en.wikipedia.org/wiki/HTTP_Live_Streaming

- https://developer.mozilla.org/en-US/docs/Web/HTTP/CORS